In your large company, every department wants its own LLM RAG.

Do you create a giant monolith system to handle everything?

A “Prompt Router” might help.

As large companies increasingly adopt Large Language Model (LLM) Retrieval-Augmented Generation (RAG) chat systems to enhance their operations, a critical question arises: should they build one monolithic system that indexes documents from all departments, or a separate, smaller RAG system for each department? Or is there some in-between approach? Let’s look at the pros and cons of each, considering the diverse needs of departments such as Engineering, Legal, Finance, HR, and Product Support.

Pros of the Monolith:

- Because it is centralized, information is shared across departments, which reduces data silos.

- It offers a consistent user experience and may be more cost-effective than building multiple smaller systems.

Cons of the Monolith:

- Security and access control become more challenging when sensitive information from different departments is commingled.

- Complexity might grow as the system tries to address the requirements of every department.

Pros of the Department Approach:

- You can customize it with domain-specific vocabulary and documents.

- There is more local control over the data.

- You can update it without impacting all other departments.

Cons of the Department Approach:

- The user experience might diverge across departments.

- Maintaining multiple systems might increase costs.

What might be the “in-between” approach?

One approach might be to let the user select the domain they are interested in within a single centralized chat UI, for example through a drop-down list or menu of more specific areas of interest. Examples might be “HR,” “Engineering,” or another department. Then, based on the user’s domain selection and prompt, the system’s program logic could decide which vector store, or even which LLM, should process the query.
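As a rough illustration of that selection logic, here is a minimal sketch in Python. The department names come from the text above, but the index names, model names, and the `DomainConfig` and `build_request` helpers are hypothetical placeholders, not part of any real system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DomainConfig:
    vector_index: str  # hypothetical name of the department's vector store
    model: str         # hypothetical model identifier


# Hypothetical mapping from the drop-down value to the backend to use.
DOMAIN_CONFIGS = {
    "HR": DomainConfig(vector_index="hr-docs", model="general-chat-model"),
    "Engineering": DomainConfig(vector_index="eng-docs", model="code-tuned-model"),
    "Legal": DomainConfig(vector_index="legal-docs", model="long-context-model"),
}

# Used when the selection is missing or not recognized.
DEFAULT_CONFIG = DomainConfig(vector_index="company-wide-docs", model="general-chat-model")


def build_request(selection: str, prompt: str) -> dict:
    """Combine the user's domain selection and prompt into a backend request."""
    config = DOMAIN_CONFIGS.get(selection, DEFAULT_CONFIG)
    return {"index": config.vector_index, "model": config.model, "prompt": prompt}


if __name__ == "__main__":
    print(build_request("HR", "How do I request parental leave?"))
```

The lookup table is the whole "router" here: the user does the classification, and the code only translates that choice into a backend.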

User selection is probably fine for a small number of possible domains, but at some point, pre-selecting a domain like “HR” or “ENG” seems like an AI anti-pattern.

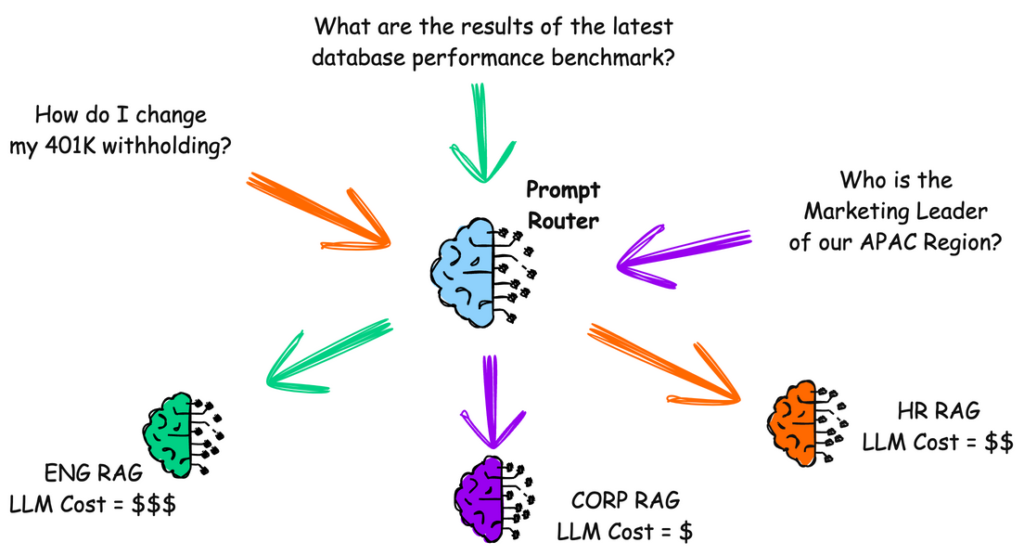

Another approach is what might be called “the prompt router.” Similar to a network packet router, the prompt router would examine the prompt text and use the result to decide where the prompt should go to be answered.

In a RAG system with indexed documents or available agents, the prompt router seems more consistent with how popular chat systems work. You ask anything and get a response based on the extent of the LLM’s training.

In an enterprise-scale, multi-departmental RAG implementation, intelligent prompt routing lets you address each subdomain’s unique needs and run custom logic based on how the prompt is classified.
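To make the idea of classifying a prompt and then running custom logic concrete, here is a deliberately simple sketch. It swaps in keyword matching as a stand-in for a real classifier (an LLM or embedding model would normally do this job), and the keyword sets and handler functions are invented for illustration.

```python
import re


# Hypothetical handlers; in a real system each would query a department's RAG index.
def handle_hr(prompt: str) -> str:
    return f"[HR RAG] {prompt}"


def handle_engineering(prompt: str) -> str:
    return f"[Engineering RAG] {prompt}"


def handle_default(prompt: str) -> str:
    return f"[default LLM] {prompt}"


# Route name -> (keywords that suggest the route, handler to call).
ROUTES = {
    "hr": ({"vacation", "benefits", "payroll", "leave"}, handle_hr),
    "engineering": ({"deploy", "build", "api", "bug"}, handle_engineering),
}


def classify(prompt: str) -> str:
    """Return the route whose keywords overlap most with the prompt, else 'default'."""
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    best_route, best_score = "default", 0
    for name, (keywords, _handler) in ROUTES.items():
        score = len(words & keywords)
        if score > best_score:
            best_route, best_score = name, score
    return best_route


def route(prompt: str) -> str:
    """Classify the prompt and hand it to the matching handler."""
    name = classify(prompt)
    handler = ROUTES[name][1] if name in ROUTES else handle_default
    return handler(prompt)


if __name__ == "__main__":
    print(route("How many vacation days do I have?"))
    print(route("Tell me a joke"))
```

The shape is what matters: a classification step, a table of routes, and a default path for prompts that match nothing. Replacing `classify` with a model call leaves the rest unchanged.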

The link below demonstrates how to set up a basic prompt router in Python. It tries to detect which function the prompt should be routed to. If there is no match, the default LLM receives the prompt. The code is simplified, so the flow should be easy to follow. It is based on OpenAI “tools,” which trigger a specific function to be called.

https://github.com/oregon-tony/AI-Examples/blob/main/llmrouter.py
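For readers who want a feel for the tool-based pattern before opening the file, here is a separate minimal sketch. It is not the code from the link. It assumes the `openai` Python package (v1 client) and an `OPENAI_API_KEY` environment variable; the tool names, handler functions, and the model name are hypothetical choices for illustration. As a simplification, when the model picks no tool, this sketch returns the model's own text reply rather than making a second call.

```python
import json

# Tool definitions in the Chat Completions "tools" format.
# The tool names and descriptions are hypothetical.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "query_hr",
            "description": "Answer questions about HR policy, benefits, and leave.",
            "parameters": {
                "type": "object",
                "properties": {"question": {"type": "string"}},
                "required": ["question"],
            },
        },
    },
    {
        "type": "function",
        "function": {
            "name": "query_engineering",
            "description": "Answer questions about builds, deployments, and internal APIs.",
            "parameters": {
                "type": "object",
                "properties": {"question": {"type": "string"}},
                "required": ["question"],
            },
        },
    },
]


# Hypothetical handlers; each would query its department's RAG index.
def query_hr(question: str) -> str:
    return f"[HR RAG] {question}"


def query_engineering(question: str) -> str:
    return f"[Engineering RAG] {question}"


HANDLERS = {"query_hr": query_hr, "query_engineering": query_engineering}


def dispatch(message) -> str:
    """Call the handler named by the first tool call, or fall back to plain text."""
    tool_calls = getattr(message, "tool_calls", None)
    if not tool_calls:
        return message.content or ""
    call = tool_calls[0]
    handler = HANDLERS.get(call.function.name)
    if handler is None:
        return message.content or ""
    args = json.loads(call.function.arguments)  # arguments arrive as a JSON string
    return handler(**args)


def route_prompt(prompt: str, model: str = "gpt-4o-mini") -> str:
    """Send the prompt with the tool list and dispatch on the model's choice."""
    from openai import OpenAI  # third-party package, imported lazily

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        tools=TOOLS,
        tool_choice="auto",
    )
    return dispatch(response.choices[0].message)
```

Calling `route_prompt("How much parental leave do I get?")` would, if the model selects `query_hr`, return the HR handler's output. Keeping `dispatch` separate from the API call makes the routing logic easy to test without network access.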