One simple setting can change how your LLM responds…

Continue readingMany of us face the crucial challenge of leveraging the power of AI while safeguarding sensitive or private data. The importance of this task cannot be overstated, as the consequences of data exposure can be severe. We’ve all been warned not to send such data to an external LLM, yet most businesses do not have the resources to host a local LLM solution. This is where the technique of ‘data masking’ comes into play, a solution we will explore in this article.

Continue reading

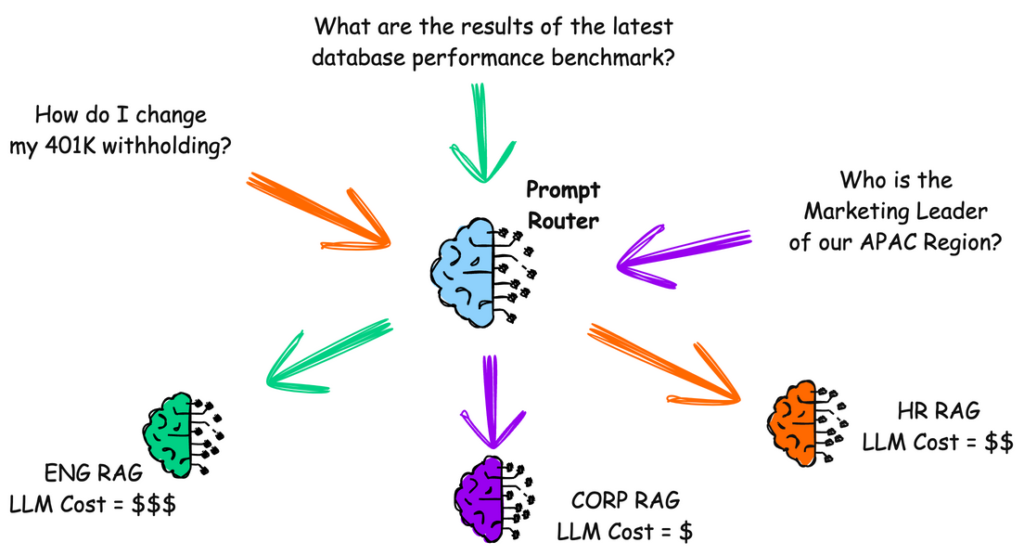

In your large company, every department wants its own LLM RAG.

Do you create a giant monolith system to handle everything?

A “Prompt Router” might help.

Continue reading

How will the application of AI change IT operations? Let’s examine one example and make one prediction for the future.

Continue readingIn the world of LLMs, someone eventually pays for “tokens.” But tokens are not necessarily equivalent to words. Understanding the relationship between words and tokens is critical to grasping how language models like GPT-4 process text.

While a simple word like “cat” may be a single token, a more complex word like “unbelievable” might be broken down into multiple tokens such as “un,” “believ,” and “able.” By converting text into these smaller units, language models can better understand and generate natural language, making them more effective at tasks like translation, summarization, and conversation.

Continue reading

Regression testing ensures that the answers obtained from tests align with the expected results. Whether it’s a ChatBot or Copilot, regression testing is crucial for verifying the accuracy of responses. For instance, in a ChatBot designed for HR queries, consistency in answering questions like “How do I change my withholding percentage on my 401K?” is essential, even after modifying or changing the LLM model or changing the embedding process of input documents.

As professionals working on AI projects, you might find this example of LLM Prompt Injection particularly relevant to your work. I’ve been involved in several AI projects, and I’d like to share one specific instance of LLM Prompt Injection that you can experiment with right away.

With the rapid deployment of AI features in the enterprise, it’s crucial to maintain the overall security of your creations. This example specifically addresses LLM Prompt Injection, one of the many aspects of LLM security.

Continue reading